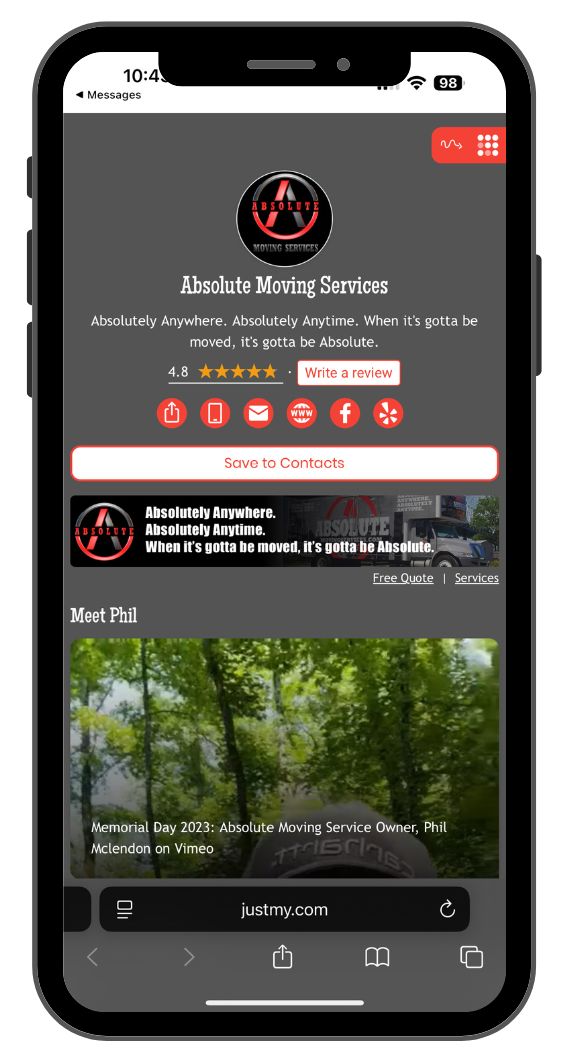

Market:

Select your city